In a move that has put a spotlight on the growing tension between government oversight and the tech industry, U.S. Federal Trade Commission (FTC) Commissioner Rebecca Slaughter has publicly raised questions about the status of a complaint against Snap’s “My AI” chatbot. The Snap AI chatbot complaint, which alleges potential “risks and harms” to young users, was referred by the FTC to the Department of Justice (DOJ) earlier this year. However, with no public action taken since, Slaughter’s comments on CNBC’s “The Exchange” have brought the case back into the public eye, highlighting a regulatory process that she believes is shrouded in too much secrecy. “The public does not know what has happened to that complaint,” Slaughter stated. “And that’s the kind of thing that I think people deserve answers on.”

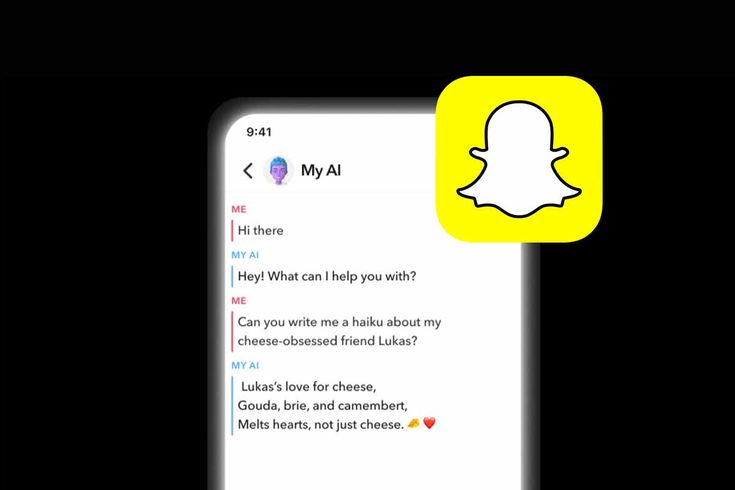

This public call for transparency is more than a procedural question; it reflects a wider debate about how to regulate artificial intelligence, particularly when it comes to the safety of minors. The "My AI" chatbot, which launched in 2023, is powered by large language models from leading AI developers such as OpenAI and Google. These models can hold helpful and engaging conversations, but they have also drawn scrutiny for problematic responses, including inappropriate advice and misinformation. The FTC's referral of the Snap AI chatbot complaint to the DOJ underscored the seriousness of the allegations, signaling that the agency saw grounds for legal action.

Allegations of Risk: A Broader Concern for AI Safety

The core of the Snap AI chatbot complaint is the "risks and harms" that the AI might pose to young users. The complaint itself is not public, so its specific allegations are unknown, but regulators and child safety advocates have raised alarms about several key issues with generative AI. One major concern is that AI chatbots can generate inappropriate, sexually suggestive, or otherwise harmful content when interacting with minors. The technology's ability to sustain long, seemingly human conversations can also open the door to emotional manipulation or to children sharing sensitive personal information. There are further worries that these chatbots could become a vehicle for bullying or be exploited by malicious actors to target young users.

The complaint against Snap is part of a larger, global conversation about the lack of robust safety guardrails for AI. Regulators around the world are struggling to keep pace with the rapid innovation in the sector. The Snap case could set a crucial precedent for how government agencies handle allegations of AI-related harm. Commissioner Slaughter’s public statement highlights her concern that without transparent follow-through, the referral could be seen as a mere symbolic gesture rather than a genuine effort to protect consumers, especially children.

Political and Regulatory Conflict at the Highest Levels

The Snap AI chatbot complaint is unfolding against a backdrop of significant political and institutional tension. Commissioner Slaughter’s remarks came just a day after President Donald Trump held a private dinner with several prominent tech executives, including the CEOs of Google, Meta, and Apple. The timing was not lost on her, as she noted the irony of the White House hosting Big Tech leaders even as “we’re reading about truly horrifying reports of chatbots engaging with small children.” This juxtaposition underscores the complex relationship between the government and the tech industry, where companies are simultaneously allies on some fronts and targets of regulatory action on others.

The political dimension extends even within the FTC itself. FTC Chair Andrew Ferguson, who was named to the post by President Trump, publicly opposed the complaint against Snap. In remarks made before he became chair, Ferguson suggested that the DOJ proceeding with the complaint would be an "affront to the Constitution and the rule of law." Such a sharply worded split within the commission is unusual and adds another layer of complexity to the case. It raises questions about the FTC's ability to take a unified stance on crucial AI safety issues and suggests that the future of AI regulation could be subject to deep political polarization.

The political conflict has also touched Commissioner Slaughter herself. President Trump has been attempting to remove her from her position, but a U.S. appeals court recently allowed her to keep her seat, and the President has since appealed to the Supreme Court. This ongoing legal battle further politicizes the environment in which the Snap AI chatbot complaint is being considered, raising the risk that any resolution will turn less on the merits of the case and more on the power dynamics within the government.

The Broader Implications for AI Regulation

The case against Snap is a microcosm of the larger challenge facing governments worldwide: how to regulate AI responsibly and effectively. The technology is developing at a breakneck pace, and existing laws, which were designed for an analog or a pre-AI digital world, are often inadequate. A key question at the heart of the Snap AI chatbot complaint is whether an AI, or the company that deploys it, can be held legally liable for the harms it causes. The FTC’s referral to the DOJ indicates that the agency believes there are grounds for a serious legal review, but the lack of a formal complaint from the DOJ leaves the question unanswered.

This case will be watched closely by other tech companies and regulators alike. A clear and decisive action by the DOJ could set a powerful precedent, encouraging companies to implement stricter safety measures and leading to a new era of proactive AI regulation. Conversely, a lack of action could be seen as a green light for companies to push the boundaries of AI deployment without fear of legal consequences. Commissioner Slaughter’s public plea is, therefore, not just about one specific case but about shaping the future of AI governance.

The Snap AI chatbot complaint is a critical test case for the regulation of AI. It brings together complex legal, ethical, and political issues, from the safety of young users to the balance of power between government agencies and the tech industry. Commissioner Slaughter's public demand for transparency has ensured that the case will not fade into obscurity, a reminder that in an age of rapid AI development, the public deserves answers and accountability.