Roblox Child Safety

Roblox, the hugely popular online platform where users create and play 3D games, is entering a new phase with the introduction of short-video and advanced AI features. The new tools are designed to empower creators and increase user engagement. Yet their launch comes amid escalating public and legal scrutiny of the company’s ability to protect its young user base. This creates a high-stakes dilemma for the platform: how to keep innovating without compromising the safety of the millions of children who log in daily. The debate over Roblox child safety illustrates a broader challenge for the tech industry as it grapples with its responsibility to protect its most vulnerable users.

The new features, announced on Friday, represent a significant evolution for the platform. The first is “Roblox Moments,” a short-video experience that allows users aged 13 and older to create and share clips of their gameplay. The clips appear on a dedicated feed within the platform. The feature resembles popular short-form video apps and aims to keep users engaged by offering a new way to discover content. The second major addition is a suite of AI-powered tools that let users generate interactive 3D objects for the games they build. Users will be able to enter simple text prompts, such as “futuristic monster truck,” and the AI will generate a functional, ready-to-use asset that fits the aesthetic of their game. These features are intended to democratize content creation, enabling smaller teams and solo developers to build more complex and dynamic experiences.

The Lawsuits and Allegations

While Roblox pushes forward with its technological advancements, it is simultaneously fighting a number of lawsuits and facing intense pressure from lawmakers. The core of these legal challenges is the allegation that Roblox has failed to implement robust safety protocols, creating an environment that enables predators to exploit underage victims. A recent lawsuit filed by the Louisiana Attorney General, Liz Murrill, specifically alleges that the company has “knowingly enabled and facilitated the systemic sexual exploitation and abuse of children” and has failed to warn parents about the dangers.

The lawsuits cite alarming statistics and specific incidents, painting a picture of a platform that is not doing enough to prevent inappropriate interactions. These allegations raise fundamental questions about the company’s business model, which some critics argue prioritizes user growth and revenue over child protection. In a public statement, Roblox pushed back on these claims, asserting that “any assertion that Roblox would intentionally put our users at risk of exploitation is simply untrue.” However, legal and regulatory pressure continues to mount, highlighting a stark contrast between the company’s public assurances and the criticism it faces from regulators, lawmakers, and litigants.

The Challenge of Moderation and Age Verification

In response to the growing concerns, Roblox has emphasized its commitment to child safety and has detailed the moderation efforts it is putting in place for the new features. The company has stated that it will moderate every video shared on “Moments” and will allow users to flag inappropriate content. The AI-generated creations will also be subject to moderation. These measures are critical for maintaining a safe environment, but they are difficult to scale given the platform’s massive user base and the speed at which user-generated content is created.

Age verification is at the heart of the Roblox child safety debate. The platform’s policy of letting users self-report their age has long been criticized, because it allows predators to pose as minors and lets children access age-inappropriate content. In response, Roblox recently announced an expansion of its age estimation program. The program uses AI to analyze a video selfie and estimate a user’s age, a proactive step toward determining ages more accurately and providing a safer, more tailored experience. However, even this technology is not foolproof, and it raises new questions about data privacy and the potential for misuse.

The challenge is to strike a delicate balance between giving users the creative freedom they crave and implementing strong, effective safety measures. As more countries introduce stricter online safety laws, companies like Roblox will be under increasing pressure to prove that their systems are truly effective. The Louisiana lawsuit and the broader regulatory scrutiny serve as a potent warning that public perception and legal action can have a direct impact on a company’s reputation and its stock performance. The case against Roblox is a powerful reminder that in the modern digital landscape, innovation and accountability are inextricably linked.

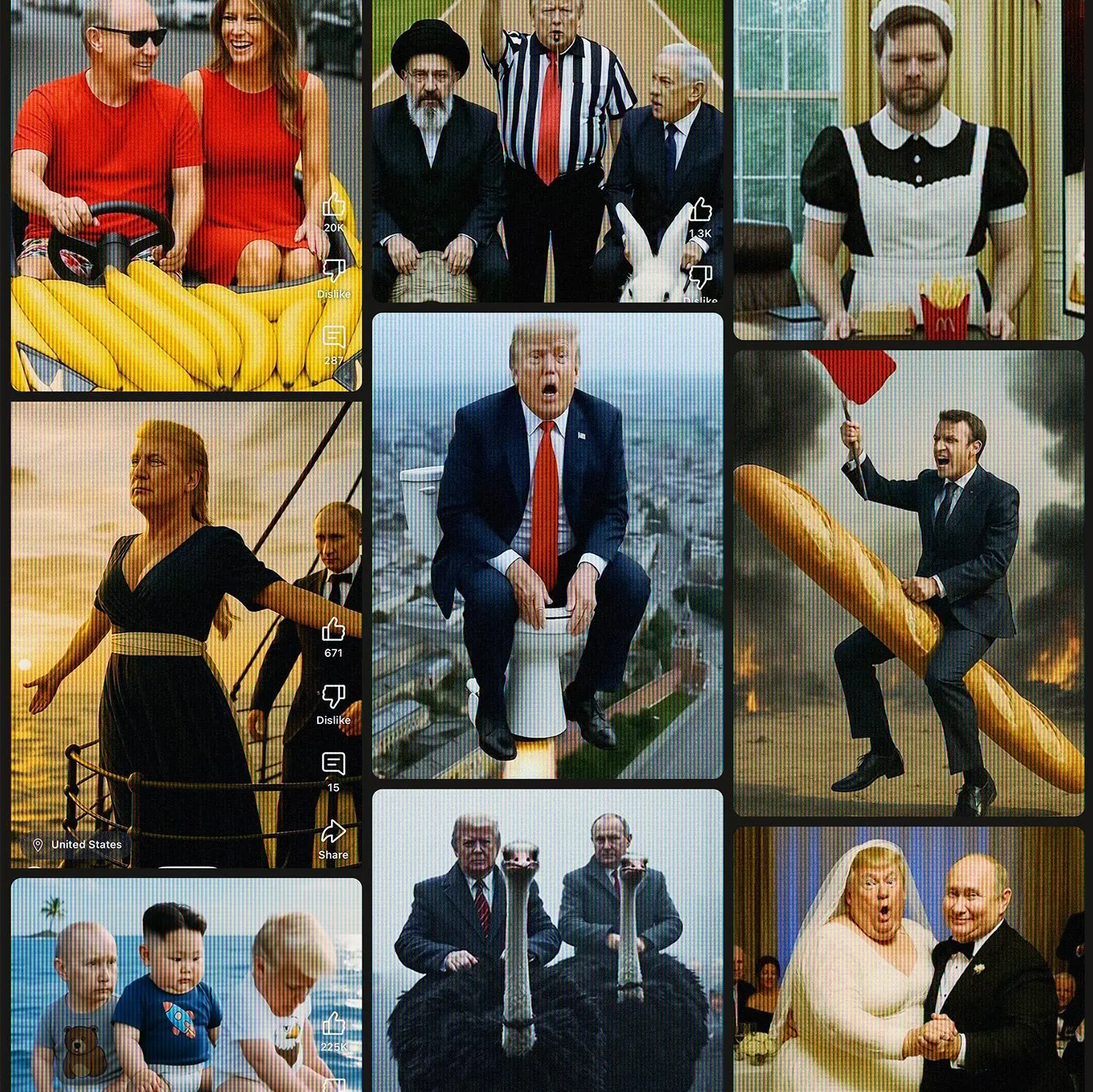

The video “Lawsuit claims Roblox is failing to protect kids from online predators” goes into more depth on the child safety concerns on the platform.

Source: CNBC