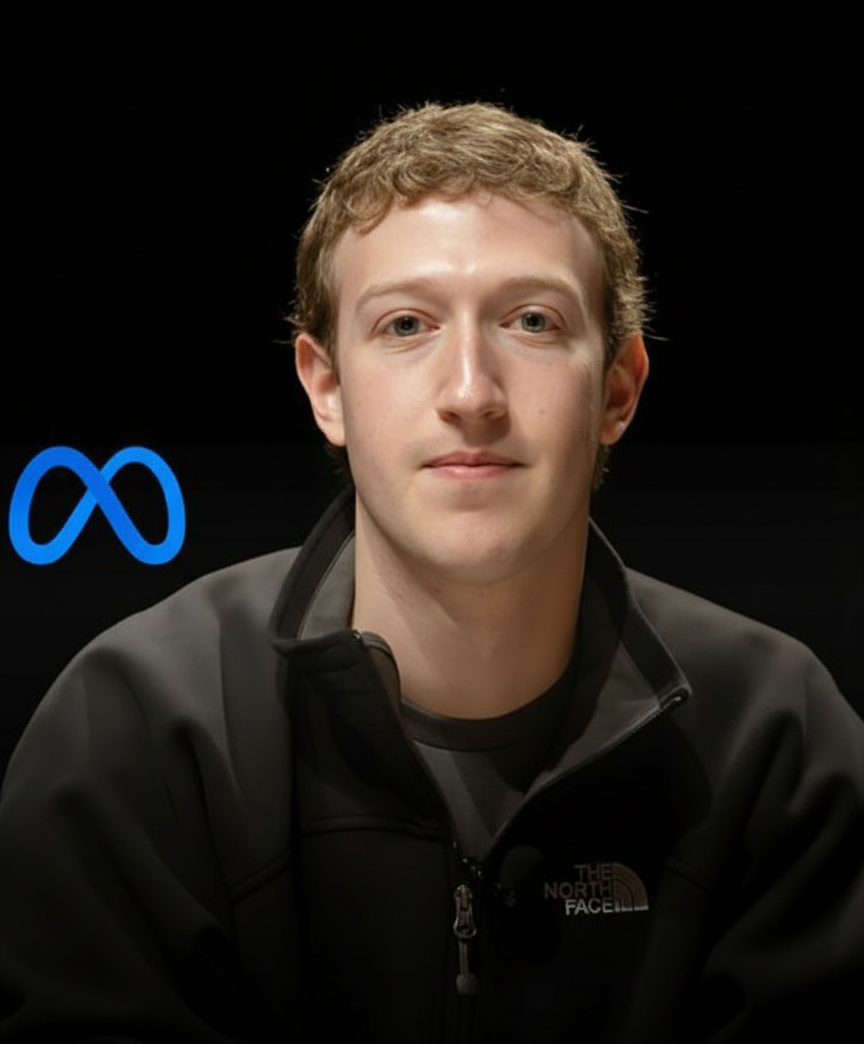

The Mark Zuckerberg social media trial has become one of the most closely watched legal battles in the tech world. The Facebook founder and Meta CEO has been ordered to appear in person before the Los Angeles County Superior Court as part of a landmark case examining how social media affects young people’s mental health.

The decision, handed down by Judge Carolyn Kuhl, rejected Meta’s argument that in-person testimony from Zuckerberg was unnecessary. The order also applies to Snap CEO Evan Spiegel and Instagram chief Adam Mosseri, marking the first time that multiple top social media executives will face direct questioning over the alleged harms caused by their platforms.

The Trial and Its Significance

The trial, expected to begin in January 2025, is among the first major cases to progress from hundreds of lawsuits filed by parents and school districts across the United States. These lawsuits accuse major tech companies—including Meta (owner of Facebook and Instagram), Snap, TikTok, and YouTube—of designing addictive platforms that exploit psychological vulnerabilities in children and teens.

According to court filings, the plaintiffs argue that the companies knowingly created features such as endless scrolling, push notifications, and “likes” that trigger dopamine responses, keeping users hooked while exposing them to harmful content.

The lawsuits were consolidated into a single large-scale case in 2022 in the Los Angeles County Superior Court. The core allegation is that social media giants prioritized profit over safety, failing to strengthen parental controls or limit exposure to harmful content.

Meta and Snap’s Response

Meta and Snap have denied wrongdoing. In filings, the companies argued that Section 230 of the Communications Decency Act, a law passed in 1996, shields them from liability for content shared by users. They maintain that the responsibility for harmful posts lies with users, not the platforms themselves.

However, Judge Kuhl ruled that the companies must still face claims of negligence and personal injury related to the design of their apps—not just the content. The court found sufficient grounds to proceed with allegations that the platforms’ architecture itself was harmful and addictive.

Meta argued that both Zuckerberg and Mosseri had already provided depositions and that additional appearances would “interfere with business operations.” The judge disagreed, writing that a CEO’s firsthand testimony was “uniquely relevant.”

Why Zuckerberg’s Testimony Matters

Judge Kuhl emphasized that Zuckerberg’s direct testimony could reveal whether Meta executives were aware of social media’s harmful effects on youth, and whether they declined to act in order to protect the company’s bottom line.

“The testimony of a CEO is uniquely relevant,” Judge Kuhl wrote, adding that an executive’s “knowledge of harms, and failure to take available steps to avoid such harms” could help determine corporate negligence.

This decision underscores growing judicial scrutiny of social media leadership and could set a precedent for future litigation.

Wider Industry Implications

The case extends far beyond Meta and Snap. TikTok and Alphabet-owned YouTube are also named in parallel lawsuits making nearly identical claims. Together, these cases represent one of the most comprehensive legal challenges ever mounted against the social media industry.

Law firm Beasley Allen, which leads part of the plaintiffs’ coalition, praised the court’s decision. “We are eager for trial to force these companies and their executives to answer for the harms they’ve caused to countless children,” a spokesperson said.

Snap’s legal team at Kirkland & Ellis responded that the ruling does not determine the truth of the allegations and expressed confidence that the company will prevail in court.

Growing Political and Legal Pressure

This trial comes amid mounting political scrutiny of how digital platforms impact mental health. Over the past year, congressional hearings and bipartisan bills have called for tighter controls on social media usage by minors.

When testifying before Congress in 2024, Mark Zuckerberg defended Meta’s efforts, insisting that his company takes youth safety seriously. “The existing body of scientific work has not shown a causal link between using social media and young people having worse mental health,” he told lawmakers.

Meta has since rolled out measures like “teen accounts” on Instagram, introducing features that restrict mature content and limit screen time. Earlier this month, the company expanded these protections by adding stricter parental settings and an AI-powered filter system modeled on movie ratings.

The Bigger Picture: Social Media on Trial

The Mark Zuckerberg social media trial is about more than one company—it represents a pivotal moment for digital accountability. If the court finds that Meta and other platforms intentionally designed addictive systems despite knowing the risks, it could reshape the future of app design and tech regulation.

Experts believe that a ruling against these companies could force stricter design ethics, stronger youth protection standards, and even new federal laws redefining corporate responsibility online.

For Zuckerberg, Spiegel, and Mosseri, the January 2025 trial could prove defining—not just for their leadership reputations, but for how society regulates the next era of social media.

Source: BBC News